Over the weekend, Google updated its privacy policy, making it clear that it reserves the right to scrape virtually everything you post online to build its AI tools. If Google can read your words, assume they now belong to the company and may be nesting somewhere inside a chatbot.

The revised Google policy states that “Google uses the information to improve our services and to develop new products, features, and technologies that benefit our users and the public.” The policy goes on to say that Google uses publicly available information, for example, to help train its AI models and to build products and features like Google Translate, Bard, and Cloud AI capabilities.

Google keeps track of its policy changes

Fortunately, Google keeps a record of every change to its terms of service. The new wording amends the previous policy and spells out additional ways the tech giant's AI systems might use your online musings. Previously, the policy said the data would be used “for language models” rather than “AI models,” and where the older version mentioned only Google Translate, Bard and Cloud AI are now listed as well.

This is an unusual clause for a privacy policy. Such policies typically describe how a company uses the information you post on its own services. Here, Google appears to reserve the right to collect and use any publicly available data, as if the entire internet were its own artificial intelligence (AI) testing ground. Google did not immediately respond to a request for comment.

Chatbots repeating some homunculoid version of your words

The practice raises new and interesting privacy questions. Most people understand that public posts are just that: public. But this calls for a new way of thinking about what it means to publish something online. The question is no longer who can see the information, but how it might be used. There's a good chance that Bard and ChatGPT have ingested your 15-year-old restaurant reviews or long-forgotten blog posts. As you read this, the chatbots may be regurgitating some homunculoid version of your words in ways that are unpredictable and hard to understand.

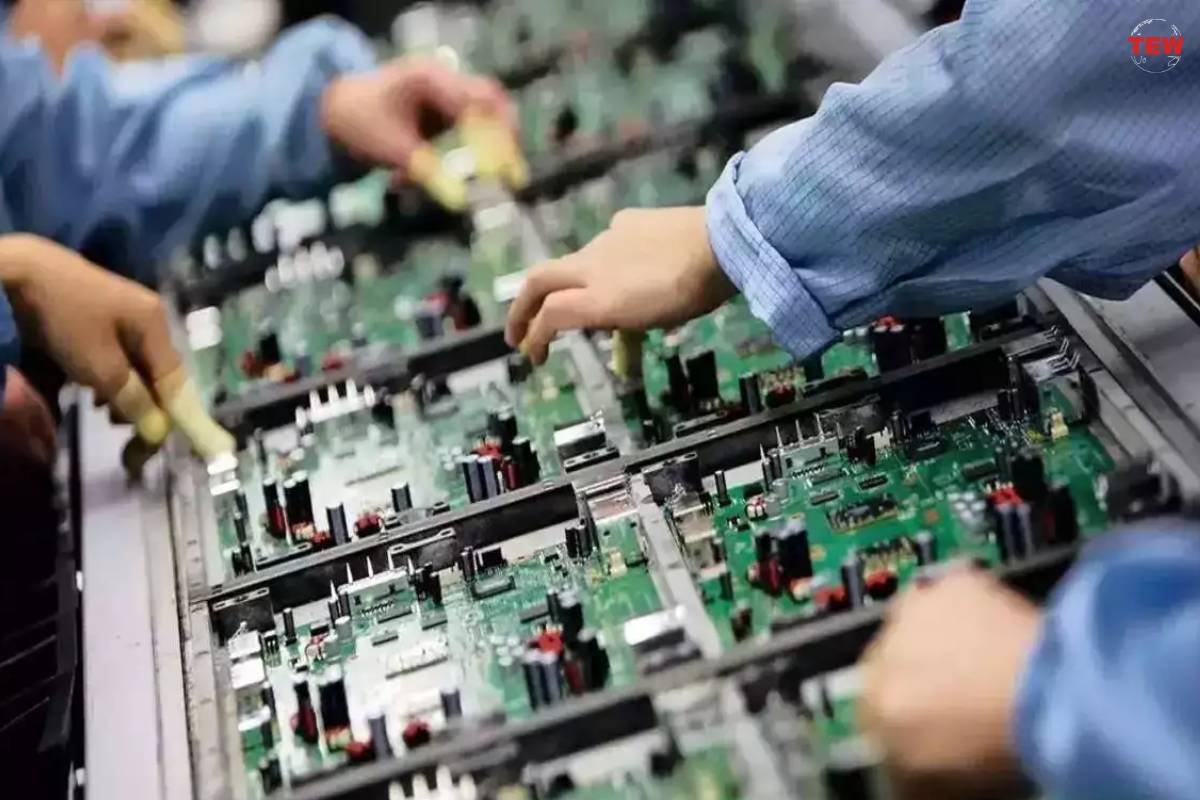

One of the less obvious complications of the post-ChatGPT era is the question of where data-hungry chatbots sourced their information. Companies like Google and OpenAI scraped vast portions of the internet to feed their robot habits. It's not at all clear that this is legal, and over the next few years the courts will have to grapple with copyright questions that would have sounded like science fiction only a few years ago. In the meantime, the phenomenon is already having some unexpected effects on consumers.

Twitter and Reddit shut off free API access

The overlords of Twitter and Reddit, who are particularly aggrieved over the AI issue, made controversial changes to lock down their platforms. Both companies cut off free access to their APIs, which had previously allowed anyone to download large volumes of posts.

The API changes at Twitter and Reddit broke third-party apps that many people relied on to access those sites. For a brief moment, it even looked as though Twitter would make public agencies, such as weather, transit, and emergency services, pay if they wanted to tweet; the company walked the decision back after intense backlash.

Web scraping has lately become Elon Musk's go-to bogeyman. Musk has blamed a number of recent Twitter mishaps on the company's need to stop people from pulling data off its site, even when the problems seem unrelated. Over the weekend, Twitter limited the number of tweets users could view each day, rendering the service all but unusable. Musk said it was a necessary response to “data scraping” and “system manipulation.”